Software [In]security: BSIMM3

Measuring Software Security Initiatives Over Time

The third major release of the BSIMM project was published this month. The original study (March 2009) included 9 firms and 9 distinct measurements. BSIMM2 (May 2010) included 30 firms and 42 distinct measurements (some firms include very large subsidiaries which were independently measured). BSIMM3 includes 42 firms, eleven of which have been re-measured, for a total set of 81 distinct measurements.

Our work with the BSIMM model shows that measuring a firm's software security initiative is both possible and extremely useful. BSIMM measurements can be used to plan, structure, and execute the evolution of a software security initiative. Over time, firms participating in the BSIMM project show measurable improvement in their software security initiatives.

BSIMM3 Basics

The Building Security In Maturity Model (BSIMM, pronounced "bee simm") is an observation-based scientific model directly describing the collective software security activities of forty-two software security initiatives. Twenty-seven of the forty-two firms we studied have graciously allowed us to use their names. They are:

BSIMM3 can be used as a measuring stick for software security. As such, it is useful for comparing software security activities observed in a target firm to those activities observed among the forty-two firms (or various subsets of the forty-two firms). A direct comparison using the BSIMM is an excellent tool for devising a software security strategy.

We measure an organization by conducting an in-person interview with the executive in charge of the software security initiative. We convert what we learn during the interview into a BSIMM scorecard by identifying which of the 109 BSIMM activities the organization carries out. (Nobody does all 109.)

BSIMM3 Facts

BSIMM3 describes the work of 786 Software Security Group (SSG) members (all full-time software security professionals) working with a collection of 1750 others in their firms to secure the software developed by 185,316 developers. On average, the 42 participating firms have practiced software security for five years and six months (as of September 2011, the newest initiative was eleven months old and the oldest was sixteen years old).

All forty-two firms agree that the success of their program hinges on having an internal group devoted to software security—the SSG. SSG size on average is 19.2 people (smallest 0.5, largest 100, median 8) with a "satellite" of others (developers, architects and people in the organization directly engaged in and promoting software security) of 42.7 people (smallest 0, largest 350, median 15). The average number of developers among our targets was 5183 people (smallest 11, largest 30,000, median 1675), yielding an average percentage of SSG to development of just over 1.99%.

That means that in our study population there are, on average, two SSG members for every 100 developers. The largest SSG was 10% of the size of its firm's developer population and the smallest was 0.05%.
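The roughly 2% figure is presumably an average of per-firm percentages, which is not the same as dividing the average SSG size by the average developer count (19.2 / 5183 is only about 0.37%). Here is a minimal sketch of the difference, using three made-up firms rather than BSIMM data; the function names are ours, chosen for illustration.

```python
# Hypothetical (ssg_size, developer_count) pairs; these are NOT BSIMM data.
firms = [(2, 20), (8, 1600), (50, 25000)]

def mean_of_ratios(firms):
    """Average the per-firm SSG-to-developer percentages."""
    return sum(100.0 * ssg / devs for ssg, devs in firms) / len(firms)

def ratio_of_means(firms):
    """Divide average SSG size by average developer count, as a percentage."""
    avg_ssg = sum(ssg for ssg, _ in firms) / len(firms)
    avg_devs = sum(devs for _, devs in firms) / len(firms)
    return 100.0 * avg_ssg / avg_devs

print(round(mean_of_ratios(firms), 2))   # small firms with large ratios pull this up
print(round(ratio_of_means(firms), 2))   # large firms dominate this one
```

Small firms with proportionally large SSGs raise the first number, which is why the two summaries can differ by a wide margin.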

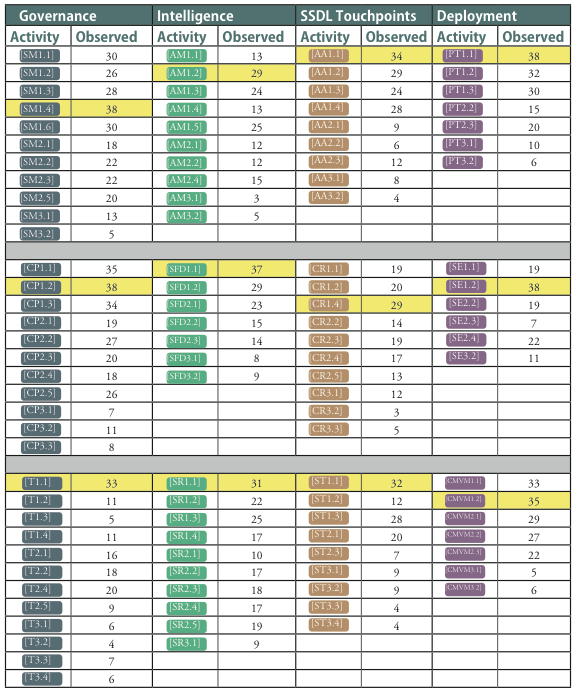

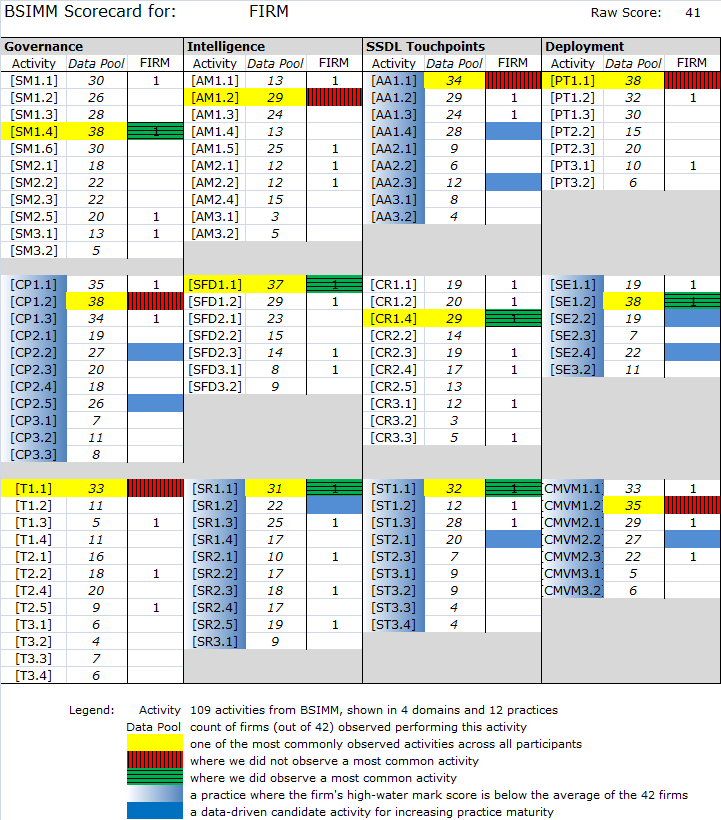

BSIMM3 describes 109 activities organized in twelve practices according to the Software Security Framework. During the study, we kept track of how many times each activity was observed in the forty-two firms. Figure 1 shows the resulting data (to interpret individual activities, download a copy of the BSIMM document, which carefully describes the 109 activities). Note that each of the 109 activity descriptions was updated between BSIMM2 and BSIMM3.

Figure 1

As you can see, twelve of the 109 activities are highlighted. These are the most commonly observed activities. We will describe them in some detail in a future article.

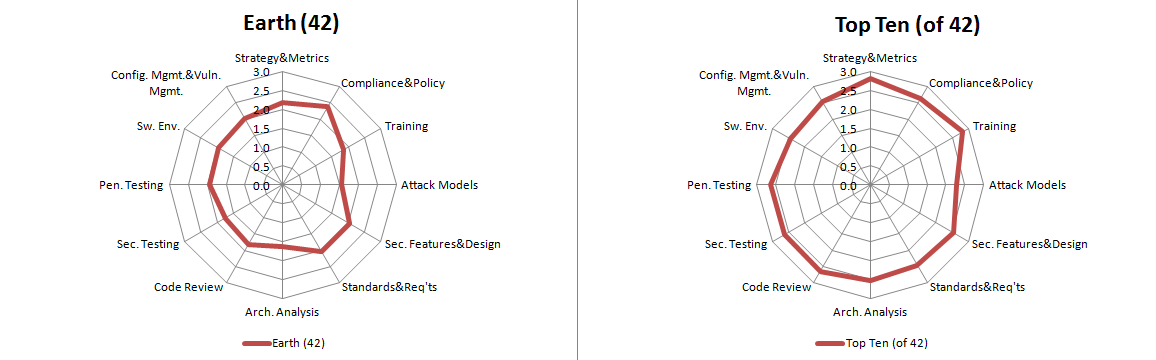

The BSIMM data yield very interesting analytical results. Figure 2 displays two "spider charts" showing average maturity level over some number of organizations for the twelve practices. The first graph shows data from all forty-two BSIMM firms. The second graph shows data from the top ten firms (as determined by recursive mean score computed by summing the activities recursively over practices and domains).

Spider charts are created by noting the highest level of activity in a practice for a given firm (a "high water mark"), and then averaging those scores for a group of firms, resulting in twelve numbers (one for each practice). The spider chart has twelve spokes corresponding to the twelve practices. Note that in all of these charts, level 3 (outside edge) is considered more mature than level 0 (inside point). Other more sophisticated analyses are possible, of course, and we continue to experiment with weightings by level, normalization by number of activities, and other schemes.
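The high-water-mark averaging described above can be sketched as follows. This is an illustration under stated assumptions, not the tooling used in the study: the firm names and observations are invented, only three of the twelve practices are included for brevity, and activity IDs are assumed to follow the pattern practice, level, dot, number (as in SM2.1 or CMVM2.2).

```python
from statistics import mean

# Hypothetical observations: firm -> set of activity IDs seen in that firm.
observed = {
    "FirmA": {"SM1.1", "SM2.1", "CP1.1", "T1.2"},
    "FirmB": {"SM1.1", "CP2.4", "CP3.1"},
}
PRACTICES = ["SM", "CP", "T"]  # only three of the twelve, for brevity

def parse(activity_id):
    """Split an ID such as 'CMVM2.2' into ('CMVM', 2)."""
    head = activity_id.split(".")[0]
    practice = head.rstrip("0123456789")
    return practice, int(head[len(practice):])

def high_water_marks(activities):
    """Highest level (0 to 3) observed in each practice for one firm."""
    marks = {p: 0 for p in PRACTICES}
    for activity_id in activities:
        practice, level = parse(activity_id)
        if practice in marks:
            marks[practice] = max(marks[practice], level)
    return marks

def spider_values(observed):
    """Average the per-firm high-water marks: one value per practice (spoke)."""
    per_firm = [high_water_marks(a) for a in observed.values()]
    return {p: mean(m[p] for m in per_firm) for p in PRACTICES}

print(spider_values(observed))
```

Each value in the result is the distance along one spoke of the chart; a practice with no observed activities scores 0 for that firm.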

Figure 2

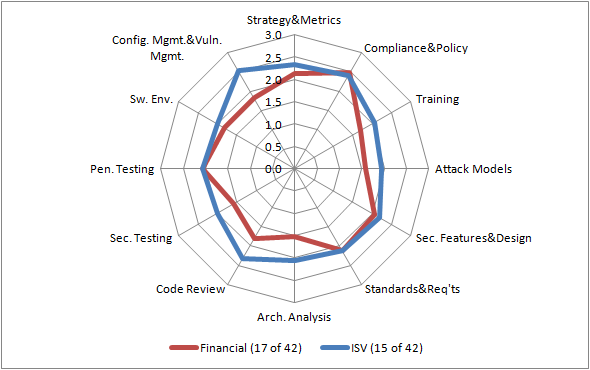

The spider charts we introduce above are also useful for comparing groups of firms from particular industry verticals or geographic locations. The graph below (figure 3) shows data from the financial services vertical (17 firms) and independent software vendors (15 firms) charted together. On average, independent software vendors have equal or greater maturity as compared to financial services firms in 11 of the 12 practices. We are not entirely surprised to see financial services come out ahead in Compliance and Policy because their industry is more heavily regulated.

Figure 3

By computing a recursive mean score for each firm in the study, we can also take a look at relative maturity and average maturity for one firm against the others. To date, the range of observed scores is [9, 90]. We are pleased that the BSIMM study continues to grow (counting distinct measurements, the data set has nearly doubled since publication of BSIMM2 and grown ninefold since the original publication). Note that once we exceeded a sample size of thirty firms, we began to apply statistical analysis yielding statistically significant results.

Measuring Your Firm with BSIMM3

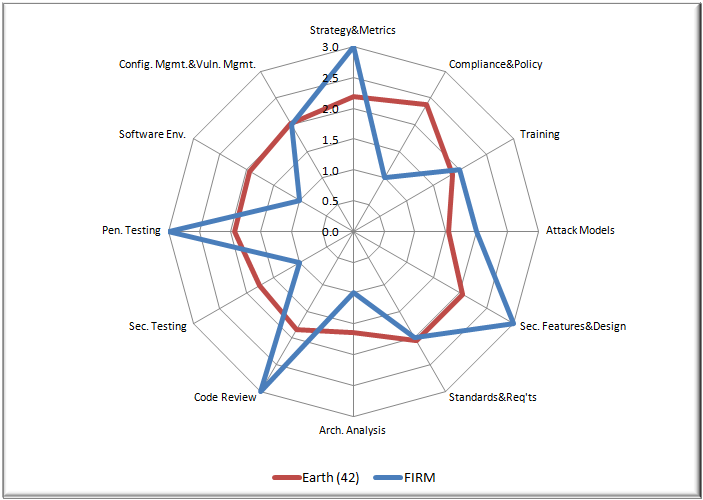

The most important use of the BSIMM is as a measuring stick to determine where your approach currently stands relative to other firms. Do this by noting which activities you already have in place, and using "activity coverage" to determine level and build a scorecard. In our own work using the BSIMM to assess levels, we found that the spider-graph-yielding "high water mark" approach (based on the three levels per practice) is sufficient to get a low-resolution feel for maturity, especially when working with data from a particular vertical or geography.

One meaningful comparison is to chart your own maturity high water mark against the averages we have published to see how your initiative stacks up. Below (figure 4), we have plotted data from a (fake) FIRM against the BSIMM Earth graph, the average over all forty-two firms.

Figure 4

A direct comparison of all 109 activities is perhaps the most obvious use of the BSIMM. This can be accomplished by building a scorecard using the data displayed above.

Figure 5

The scorecard you see here depicts a (fake) firm performing 41 BSIMM activities (1s in the FIRM columns), including six of the twelve most commonly seen activities (green boxes). On the other hand, the firm is not performing the six other most commonly observed activities (red boxes) and should take some time to determine whether these are necessary or useful to its overall software security initiative. The Data Pool column shows the number of observations (currently out of 42) for each activity, allowing the firm to understand the general popularity of an activity amongst the 42 BSIMM participants.
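A scorecard of this kind is straightforward to assemble once you have a list of your own activities. The sketch below is a rough illustration only: the data-pool counts are invented rather than taken from Figure 1, the function names are ours, and "most common" is assumed to mean the top k activities by observation count.

```python
# Hypothetical data pool: activity ID -> number of firms in which it was observed.
# The counts are invented for illustration; they are NOT the BSIMM3 figures.
data_pool = {"SM1.1": 30, "CP1.1": 28, "T1.2": 12, "PT1.2": 25, "CR1.1": 10}

# Hypothetical set of activities the firm being assessed carries out.
firm_activities = {"SM1.1", "T1.2", "CR1.1"}

def build_scorecard(data_pool, firm_activities):
    """One row per activity: (activity, data-pool count, 1 if the firm does it else 0)."""
    return [(a, n, int(a in firm_activities)) for a, n in sorted(data_pool.items())]

def most_common(data_pool, k):
    """The k most frequently observed activities (ties broken by ID)."""
    return sorted(data_pool, key=lambda a: (-data_pool[a], a))[:k]

def gaps(data_pool, firm_activities, k):
    """Commonly observed activities the firm is not performing (the 'red boxes')."""
    return [a for a in most_common(data_pool, k) if a not in firm_activities]

for row in build_scorecard(data_pool, firm_activities):
    print(row)
print("Gaps among the top 3:", gaps(data_pool, firm_activities, 3))
```

The gap list is a prompt for discussion, not a to-do list: a missing activity may simply not fit the organization.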

Once you have determined where you stand with activities, you can devise a plan to enhance practices with other activities suggested by the BSIMM. By providing actual measurement data from the field, the BSIMM makes it possible to build a long-term plan for a software security initiative and track progress against that plan. For the record, there is no inherent reason to adopt all activities in every level for each practice. Adopt those activities that make sense for your organization, and ignore those that don't.

BSIMM as a Longitudinal Study

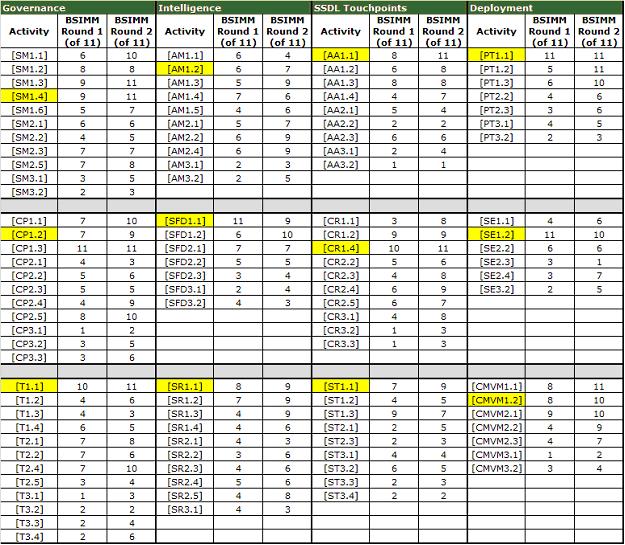

Eleven of the forty-two firms have been measured twice using the BSIMM measurement system. On average, the time between the two measurements was 19 months. Though individual activities among the 12 practices come and go as shown in the Longitudinal Scorecard below (figure 6), in general re-measurement over time shows a clear trend of increased maturity in the population of eleven firms re-measured thus far. The recursive mean score went up in ten of the eleven firms, by an average of 14.5 (a 32% average increase). Software security initiatives mature over time.

Figure 6

Here are two ways of thinking about the change represented by the longitudinal scorecard. We see the biggest changes in activities such as PT1.2 (defect loop to developers), where six firms began executing this activity, and CP2.4 (SLAs), SR1.3 (compliance put into requirements), CR1.1 (a top N bugs list), and CMVM2.2 (track bugs through process), where five of the 11 firms began executing these activities. There are nine activities that four of the firms undertook.

Less obvious from the scorecard is the "churn" among activities. For example, while the sum count of firms remained the same for SM2.1 (publish data), four firms started executing this activity while four firms stopped doing this activity. Similarly, three started and four stopped performing T1.3 (office hours) and four started and two stopped performing SM1.5 (identify metrics), T1.2 (onboarding training), and AM2.2 (technology attack patterns).
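Churn of this kind is invisible in a net count but easy to recover from paired measurements. A minimal sketch, assuming each measurement is stored as a set of observed activity IDs per firm (the firms and observations here are made up):

```python
# Hypothetical first and second measurements: firm -> set of observed activity IDs.
first = {
    "FirmA": {"SM2.1", "T1.3"},
    "FirmB": {"SM2.1"},
    "FirmC": {"PT1.2"},
}
second = {
    "FirmA": {"T1.3", "PT1.2"},
    "FirmB": {"SM2.1", "PT1.2"},
    "FirmC": {"PT1.2", "SM2.1"},
}

def churn(first, second, activity):
    """Return (started, stopped, net change) for one activity across re-measured firms."""
    started = sum(1 for f in first if activity in second[f] and activity not in first[f])
    stopped = sum(1 for f in first if activity in first[f] and activity not in second[f])
    return started, stopped, started - stopped

for activity in ("SM2.1", "PT1.2", "T1.3"):
    print(activity, churn(first, second, activity))
```

In this toy data SM2.1 has a net change of zero even though one firm started and one stopped, which mirrors the pattern described above.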

BSIMM Over Time

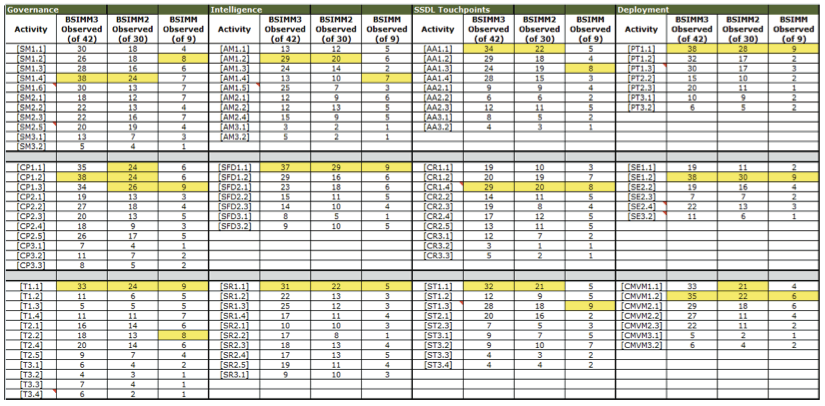

Observations from the three releases (BSIMM, BSIMM2, and BSIMM3) are shown side by side in figure 7 below. The original model has retained almost all of its descriptive power even as the dataset has multiplied by a factor of 9. We did make a handful of very minor changes in the model between each release in order to remain true to our data-first approach.

Figure 7

The BSIMM Community

The 42 firms participating in the BSIMM Project make up the BSIMM Community. A moderated private mailing list allows SSG leaders participating in the BSIMM to discuss solutions with those who face the same issues, discuss strategy with someone who has already addressed an issue, seek out mentors from those who are farther along a career path, and band together to solve hard problems (e.g., forming a BSIMM mobile security working group).

The BSIMM Community also hosts an annual private Conference where up to three representatives from each firm gather together in an off-the-record forum to discuss software security initiatives. In 2010, 22 of 30 firms participated in the BSIMM Community Conference hosted in Annapolis, Maryland. During the conference, we ran a workshop on practice/activity efficiency and effectiveness and published the results as an InformIT article titled Driving Efficiency and Effectiveness in Software Security.

The BSIMM website includes a credentialed BSIMM Community section where information from the Conferences, working groups, and mailing-list-initiated studies is posted.

If you are interested in participating in the BSIMM project as we work towards BSIMM4, please contact us through the BSIMM website.