One major goal that the 32 firms in the BSIMM study share is the ability to constantly adjust their software security initiatives to maximize efficiency and effectiveness. Put in simple terms, how do I decide on the right mix of code review, architecture risk analysis, penetration testing, training, measurement and metrics, and so on, and then how do I ensure I can react to new information about my software?

The trouble is, nobody is sure how to solve this hard problem. In this article we take a run at it in the scientific spirit of the BSIMM project: gathering data first and finding out what the data have to say. We describe our findings and our data here in hopes that they may prove useful to other software security initiatives facing the effectiveness mountain.

Framing the Efficiency Question

As part of the BSIMM Community conference held in November in Annapolis, we ran a small data-gathering workshop. Our goal was to understand how to measure efficiency and effectiveness at the practice level. As a quick review, here are the twelve practices of the Software Security Framework described in previous articles. We use the twelve practices to organize our data gathering.

| Governance | Intelligence | SDL Touchpoints | Deployment |
|---|---|---|---|
| Strategy and Metrics | Attack Models | Architecture Analysis | Penetration Testing |
| Compliance and Policy | Security Features and Design | Code Review | Software Environment |
| Training | Standards and Requirements | Security Testing | Configuration Management and Vulnerability Management |

During the workshop, we split the 60 or so attendees into three groups and concentrated on gathering and discussing data based on three questions:

- What percentage of annual IT spend does the budget for your software security initiative represent?

- What is the associated percentage of annual software security initiative spend in each of the 12 practices (summed to 100)?

- How would you rank each of the 12 practices by importance to your current software security initiative?

Here are the results.

Software Security Spend in the Big Picture

Recall that question 1 is, what percentage of annual IT spend does the budget for your software security initiative represent? For software security spend as a portion of firm-wide IT spend, we collected data from eight firms with very active software security initiatives. These firms come from a number of diverse verticals. Their software security initiatives vary in maturity as assessed by the BSIMM measurement. The percentages of annual IT spend ranged from 0.13% to 5% with an average of 1.89%.

Remember, this is not security spend, but rather software security spend. Given the kinds of technologically intense businesses participating in the BSIMM, this is a healthy percentage.
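The arithmetic behind question 1 can be sketched as follows. This is a minimal illustration only: the function name `software_security_share` and the budget figures are hypothetical and are not the workshop data.

```python
from statistics import mean


def software_security_share(sw_security_budget: float, it_spend: float) -> float:
    """Return the software security budget as a percentage of annual IT spend."""
    if it_spend <= 0:
        raise ValueError("it_spend must be positive")
    return 100.0 * sw_security_budget / it_spend


# Hypothetical (software security budget, total IT spend) pairs in the same
# currency unit. These are illustrative values, NOT figures from the workshop.
firms = [(2.0, 400.0), (5.0, 100.0), (1.5, 150.0)]

shares = [software_security_share(budget, total) for budget, total in firms]
summary = {"low": min(shares), "high": max(shares), "average": mean(shares)}
```

With real inputs, `summary` would hold the same three statistics reported above (low, high, and average percentage of IT spend).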

How Much is Spent On Each Practice?

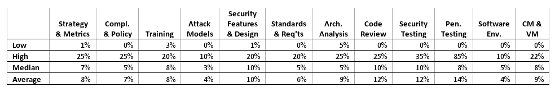

What is the associated percentage of annual software security initiative spend in each of the 12 SSF practices (summed to 100)? For software security spend per practice, we collected data from 14 firms. The following are the low, high, median, and average values per practice as reported or derived.

Figure 1
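One plausible way to derive the low, high, median, and average values per practice from per-firm allocations is sketched below. The helper names and the three-practice sample allocations are assumptions made for brevity; a real dataset would list all 12 practices for each firm.

```python
from statistics import mean, median


def validate_allocation(allocation: dict[str, float], tol: float = 0.01) -> None:
    """Check that one firm's per-practice percentages sum to 100."""
    total = sum(allocation.values())
    if abs(total - 100.0) > tol:
        raise ValueError(f"allocation sums to {total}, expected 100")


def summarize_by_practice(firms: list[dict[str, float]]) -> dict[str, dict[str, float]]:
    """Compute low, high, median, and average spend percentage per practice."""
    for allocation in firms:
        validate_allocation(allocation)
    practices = sorted({p for allocation in firms for p in allocation})
    result = {}
    for practice in practices:
        # A practice a firm did not report is treated as 0% spend (an assumption).
        values = [allocation.get(practice, 0.0) for allocation in firms]
        result[practice] = {
            "low": min(values),
            "high": max(values),
            "median": median(values),
            "average": mean(values),
        }
    return result


# Hypothetical allocations, NOT workshop data.
sample = [
    {"Penetration Testing": 50.0, "Code Review": 30.0, "Training": 20.0},
    {"Penetration Testing": 20.0, "Code Review": 40.0, "Training": 40.0},
    {"Penetration Testing": 10.0, "Code Review": 30.0, "Training": 60.0},
]
stats = summarize_by_practice(sample)
```

Validating that each allocation sums to 100 before aggregating catches data-entry slips early, which matters when values are "reported or derived" by hand.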

As you can see, the raw data themselves were very mixed, so we decided to analyze the dataset sorted by initiative age, maturity (in terms of BSIMM score), and development team size. We applied the following (somewhat arbitrary) buckets after sorting:

- Age Buckets: 0–2 years, 2.1–4 years, and 4.1+ years

- Maturity Buckets: 0–30 BSIMM activities, 31–59 BSIMM activities, 60+ BSIMM activities

- Dev Team Size Buckets: 0–800 developers, 801–5500 developers, 5501+ developers

Note that these buckets were chosen to divide the data set into approximately evenly-sized subsets.
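The bucketing itself is simple to express in code. The sketch below uses the bucket boundaries listed above with inclusive upper edges; the labels ("low", "middle", "high"), the record field names, and the sample firms are hypothetical.

```python
from bisect import bisect_left
from collections import defaultdict

# Inclusive upper edges of the first two buckets, taken from the lists above.
AGE_EDGES = (2, 4)          # 0-2 years, 2.1-4 years, 4.1+ years
MATURITY_EDGES = (30, 59)   # 0-30, 31-59, 60+ BSIMM activities
DEV_EDGES = (800, 5500)     # 0-800, 801-5500, 5501+ developers

LABELS = ("low", "middle", "high")  # hypothetical labels for the three buckets


def bucket(value: float, upper_edges: tuple) -> str:
    """Map a value to its bucket label; upper edges are inclusive."""
    if value < 0:
        raise ValueError("value must be non-negative")
    return LABELS[bisect_left(upper_edges, value)]


def group_by_bucket(firms: list, key: str, upper_edges: tuple) -> dict:
    """Group firm names by the bucket that firm[key] falls into."""
    groups = defaultdict(list)
    for firm in firms:
        groups[bucket(firm[key], upper_edges)].append(firm["name"])
    return dict(groups)


# Hypothetical firm records, NOT workshop data.
firms = [
    {"name": "A", "age_years": 1.5, "bsimm_activities": 25, "developers": 500},
    {"name": "B", "age_years": 3.0, "bsimm_activities": 45, "developers": 6000},
    {"name": "C", "age_years": 7.0, "bsimm_activities": 72, "developers": 2000},
]
by_age = group_by_bucket(firms, "age_years", AGE_EDGES)
by_size = group_by_bucket(firms, "developers", DEV_EDGES)
```

Keeping the edges as data rather than hard-coded comparisons makes it easy to re-cut the buckets if a larger dataset no longer splits into evenly sized subsets.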

Looking at the spend data through the Age lens, we see the following:

Figure 2

Perhaps the most interesting observation here is that effort in Penetration Testing starts out very high in young initiatives just getting started and decreases dramatically as software security initiatives get older. This makes intuitive sense since Penetration Testing is a great way to determine that you do, indeed, have a software security problem of your own. The problem is that Penetration Testing does little to solve the software security problem — that takes other activities.

In the data, there's also an interesting bulge in Architecture Analysis and Code Review in the middle "adolescent" bucket. It seems that after identifying the problem with Penetration Testing in the early years, initiatives begin to attack the problem with technical activities.

Practices in older initiatives are more evenly balanced than in young or adolescent initiatives. (Recall from BSIMM2 statistics that Age and Maturity do correlate.) This result reflects a preference for a well-rounded approach in older initiatives.

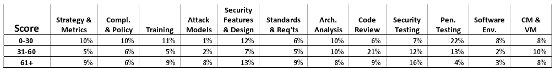

When we look at spending data through the Maturity lens, we see the following:

Figure 3

Once again, effort in Penetration Testing decreases dramatically, this time as the initiative's BSIMM score grows. So it's not just older initiatives that put away their Pen Testing toys, but also initiatives that are more mature as measured by the BSIMM.

Perhaps the most interesting observation here is that Code Review sees a major increase in the middle maturity bucket. This may reflect the effectiveness of the sales teams from static analysis vendors as much as it does the effectiveness of code review itself. Once again, though, we see firms in the adolescent stage grasping for technological solutions to the software security problem.

Effort in Attack Models and in Security Testing increases fairly rapidly as initiatives mature. Practices in firms with higher BSIMM scores are more evenly balanced, or as we have come to call them in the BSIMM Community, "well rounded."

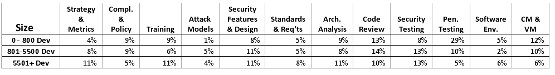

Finally, if we look at our spend data through the Dev Team Size lens (which we use as a proxy for firm size), we see the following:

Figure 4

Here we see reliance on Penetration Testing decrease as firm size grows. There is also an increase in Strategy and Metrics as the number of developers increases. In general, firm size does not seem to yield data as interesting as the other two lenses. This result suggests that development team size has little to do with selection of the "practice mix" for a software security initiative, which reinforces claims that no development organization is too small to need explicit software security activity.

In general, the most useful and actionable data revealed by question two can be found among high-maturity firms that have been practicing software security for a while. These are the firms to emulate and borrow ideas from.

How Do Practices Stack Up Against Each Other?

For BSIMM practices ranked by importance from 1 to 12, we received data from 14 firms and sliced the data from the perspectives of initiative age, maturity (in terms of BSIMM score), and development team size. We used the same buckets as above.

Overall, Strategy and Metrics received the most high rankings (i.e., the most ratings from 1 to 5). Software Environment was uniformly ranked as the least important practice. As an average of the rankings given by each firm, Training emerged as the most important practice.
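The two summaries used here (a count of high rankings versus an average rank) can point to different "winners." A minimal sketch, assuming each firm supplies a rank per practice with 1 as most important; the function names and the sample rankings are hypothetical and trimmed to three practices for brevity.

```python
from collections import Counter, defaultdict
from statistics import mean


def high_ranking_counts(rankings: list, cutoff: int = 5) -> Counter:
    """Count, per practice, how many firms ranked it at or above the cutoff (1..cutoff)."""
    counts = Counter()
    for firm in rankings:
        for practice, rank in firm.items():
            if rank <= cutoff:
                counts[practice] += 1
    return counts


def average_ranks(rankings: list) -> dict:
    """Average the rank each practice received; a lower average means more important."""
    collected = defaultdict(list)
    for firm in rankings:
        for practice, rank in firm.items():
            collected[practice].append(rank)
    return {practice: mean(ranks) for practice, ranks in collected.items()}


# Hypothetical rankings from three firms, NOT workshop data.
rankings = [
    {"Strategy and Metrics": 2, "Training": 1, "Software Environment": 12},
    {"Strategy and Metrics": 4, "Training": 6, "Software Environment": 11},
    {"Strategy and Metrics": 5, "Training": 1, "Software Environment": 12},
]

counts = high_ranking_counts(rankings)
averages = average_ranks(rankings)
most_high_rankings = counts.most_common(1)[0][0]
best_average = min(averages, key=averages.get)
```

In this made-up sample, one practice collects the most top-five rankings while another has the best average rank, which shows how both statements in the paragraph above can hold at once.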

Nothing particularly interesting emerged from the data when viewed through the Age lens.

When viewed through the Maturity lens, we see interesting data for Penetration Testing. For those firms with a BSIMM score of 30 or less or of 60 or more, Penetration Testing was ranked extremely low in importance (i.e., some 10s, 11s, and 12s). For the middle group with scores in the 31–59 range, Penetration Testing was uniformly rated as 1, 2, 3, or 4 in importance. Put in simple terms, Penetration Testing is a C+ strategy commonly found in the middle of the bell curve.

When viewed through the Dev Team Size lens, the importance attributed to Strategy and Metrics increases significantly as team size grows. That is, large firms require that plenty of governance activity be put in place just to make forward progress.

In general, ordinal importance data do not yield very interesting or useful results (especially relative to percentage spend data). We suggest using the spending data, rather than importance rankings among practices, to drive effectiveness decisions.

Well-Roundedness is Better Than Tent Poles

Basic BSIMM results that we have published before show that a well-rounded program (one that includes activities from all 12 of the practices) is the best way to proceed. The data from our workshop confirm that result and show that more mature firms that have been doing software security longer than their peers will adopt activities from across the entire BSIMM range.